AI Beyond the Clinic: Labs, Telemedicine, and Consumer Healthcare

February 16, 2021

It is no secret that poor data quality and weak adherence to clinical protocols are pervasive in healthcare. In this article, we will explore why data engineering and clinical integration remain major bottlenecks to bringing AI tools into routine patient care. We will also discuss how working with limited data types and a narrower scope of services may enable more reliable AI development and deployment environments beyond the traditional point-of-care. In search of these environments, we will identify highly operational settings and map them across three levels of increasing distance from the clinic: the provider's own back-of-house, external service vendors, and direct-to-patient healthcare services. Finally, we will discuss how a consumer-first approach to healthcare may make it more amenable to new technologies, and to AI in particular.

Unstructured Data Silos & Protocol Deviations

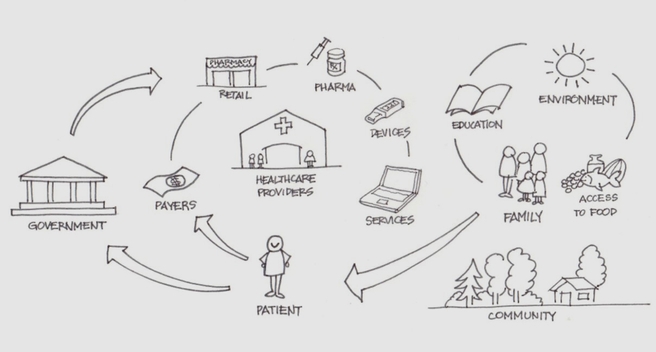

Healthcare systems are large and complex structures, both in the sheer number of their components and in the degree of influence those components exert on one another↗︎. Mismanaging such complexity can produce systems that are difficult to predict and care that is challenging to deliver, with consequences such as patient dissatisfaction, medical errors, and hospital-acquired infections. While some countries have national health services or single-payer systems that may help reduce this complexity, some of these issues still persist.

Healthcare: a complex system made up of multiple disparate components with diverse stakeholders and participants. Source: NEJM Catalyst (catalyst.nejm.org)

This complexity is naturally reflected in the system’s digital footprint, i.e. its data. Healthcare data of all sorts (administrative, claims, clinical, etc.) is messy, sparse, and incomplete, to say the least. Consider a given cancer patient’s clinical data. Much of it will lie scattered across the pathology lab where her biopsy specimen was analyzed, the radiology department where she got a mammogram, the radiation oncology center where she received radiotherapy, and many other places. These are the “healthcare data silos” we read about everywhere. The system is so large that instead of scrapping it and starting over, we are heaping complexity on complexity↗︎ by offering data janitoring as a service. A great example is Flatiron, recently acquired by Roche↗︎. Flatiron generates value by aggregating and curating cancer data, using an army of mechanical turks who painstakingly go through the records and extract the relevant details↗︎.

Clinical workflow is another area where this complexity manifests itself. A wide gap exists between clinical protocols and actual clinical practice↗︎. This is most evident in emergency care, where protocol deviations are commonplace and the urgency of treating patients takes priority↗︎. Primary and specialty care settings are not immune to these issues either. Medical professionals are often interrupted by phone calls and pager pings while performing tasks, and such interruptions have been associated with medication administration errors, among other problems↗︎. Even patients themselves often have trouble adhering to recommended treatment regimens, which negatively impacts the quality of care provided↗︎.

AI in the Clinic

Given this status quo, one can only imagine the amount of friction AI tools will face as they work their way into the clinic.

Friction happens first on the data front, and deep learning has only added to it. We’ve covered “expert systems”, the predecessors to “AI in healthcare” as we know it today, in a previous article. These systems used machine learning methods that worked with relatively little data, but they were tedious to build, proprietary, and required deep expertise. From a deployment standpoint, they had limited clinical utility, did not generalize well to new patient populations, and confused users when they failed. Today, these methods have largely been replaced by deep learning, which brought improved performance, a much greater appetite for data, and over a dozen well-supported, well-documented open-source libraries for model development and deployment↗︎.

In essence, deep learning has shifted the bottleneck from the methods to the data. In doing so, it has made healthcare data engineering a core component of any clinical AI product. This engineering must deal with unstructured data: most healthcare data is unstructured, and that is where untapped value lies↗︎. Clinical notes are a prime example. Inconsistent terminologies, shorthands, and abbreviations that reflect each author's training make the notes unusable as-is. Data engineering must also aggregate data from the multiple sources a given modelling problem requires, and address interoperability between them. It is no wonder that 60% of data science and machine learning work today is purely data curation↗︎.
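As a rough illustration of the curation work described above, the Python sketch below expands abbreviations in free-text notes and groups records from separate silos by patient. The abbreviation table, the record fields, and the shared `patient_id` key are hypothetical assumptions chosen for illustration. Real pipelines would rely on curated clinical vocabularies, would have to resolve ambiguous abbreviations from context, and would first need to solve the record-linkage problem of matching the same patient across sources.

```python
import re
from collections import defaultdict

# Hypothetical abbreviation table, for illustration only. Real notes contain
# thousands of shorthands, many of them ambiguous without context.
ABBREVIATIONS = {
    "htn": "hypertension",
    "dm2": "type 2 diabetes mellitus",
    "sob": "shortness of breath",
    "bx": "biopsy",
}


def normalize_note(note):
    """Lowercase a free-text note and expand known abbreviations token by token."""
    def expand(match):
        token = match.group(0)
        return ABBREVIATIONS.get(token, token)  # unknown tokens pass through unchanged
    return re.sub(r"[a-z0-9]+", expand, note.lower())


def aggregate_by_patient(*sources):
    """Merge (source_name, records) pairs into one record list per patient.

    Assumes every source already shares a common "patient_id" key, which in
    practice is itself a hard record-linkage problem.
    """
    merged = defaultdict(list)
    for source_name, records in sources:
        for record in records:
            merged[record["patient_id"]].append({"source": source_name, **record})
    return dict(merged)


# Hypothetical records from two silos for the same patient.
pathology = [{"patient_id": "p1", "note": "Bx of L breast, pt w/ HTN"}]
radiology = [{"patient_id": "p1", "note": "Mammogram; hx of DM2"}]

patients = aggregate_by_patient(("pathology", pathology), ("radiology", radiology))
for record in patients["p1"]:
    print(record["source"], "->", normalize_note(record["note"]))
# pathology -> biopsy of l breast, pt w/ hypertension
# radiology -> mammogram; hx of type 2 diabetes mellitus
```

Even this toy version hints at the problem: shorthands such as `hx` and `w/` that are missing from the table pass through untouched, and every new source or author brings new ones.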

Friction then happens on the clinical implementation front, where weak adherence to clinical protocols presents major challenges. How can AI integrate seamlessly with an unpredictable and constantly changing clinical workflow? Technology in healthcare has been notorious for laborious data entry, irrelevant information overload, unactionable alerts, and unfriendly user experiences; electronic health records (EHRs) are a notable example↗︎. We currently cannot claim AI will be any different. One can argue that integration today is more challenging than it was a decade ago, given the bad reputation clinical expert systems have given AI tools generally, as well as the growing “digital clutter” of software that clinicians must interact with.

In the short term, successful clinical AI tools will be those deployed in the background, performing silent tasks with little to no interruption to clinical workflow.

AI Beyond the Clinic

While discussions around “AI in healthcare” tend to focus on the providers’ front-of-house, where clinical care is delivered, deploying AI in the background requires moving beyond this traditional point-of-care into other areas of the broader healthcare system. This can happen at three levels, each further away from the clinic.

- Level 1: Provider Back-of-house

The first level lies within the provider's walls but operates in the back-of-house. Examples include radiology reading rooms, labs, and other environments that are free from the intricacies of patient interaction, scheduling, management, etc. Healthcare professionals in these settings are assigned specific tasks to be carried out on specific data types. This well-defined and limited scope of services provides stable conditions for AI model development and deployment. For instance, an AI model for identifying a specific abnormality in a CT image can run automatically right after image acquisition, and its results can then be presented alongside the image to the radiologist. Most AI studies and implementation efforts today operate at this level.

- Level 2: Service Vendors

The second level goes beyond the provider to other organizations that do business with providers, collectively known as vendors. More specifically, vendors that provide services are very well positioned to use AI tools in their workflows. Examples include laboratory testing services for blood, tissue, and other clinical specimens. A highly operational lab with good control over its protocols often enjoys high levels of data cleanliness and workflow adherence. Another category here includes tele-health vendors that remotely extend hospitals’ capacity or scope of services. In a previous article, we looked at the growing demand for tele-pathology as a result of the COVID-19 pandemic. By outsourcing these services, we are effectively creating an isolated sandbox within which AI can be deployed and risks can be more tightly managed.

- Level 3: Direct-to-patient Healthcare

The third level is where the traditional provider is replaced by direct-to-patient healthcare services, which have recently grown in popularity and represent a new mode of healthcare delivery. These include tele-medicine services that offer consultations over the web, especially for routine check-ups that can be administered remotely. We are already seeing AI-powered chatbots used to triage tele-medicine patients before the virtual consultation (e.g. Ada↗︎, Buoy↗︎, and Babylon↗︎). Another category includes testing services for samples that patients collect and send in themselves (e.g. Paloma for thyroid function tests↗︎, Steady for diabetes monitoring↗︎, and Nurx for COVID-19↗︎). As with labs that work with providers, the operational nature of these services makes them ideal for AI interventions.

Tailwinds & Headwinds

While service providers at each of these levels may appear distinct, they have much in common. They offer a specialized and clearly defined scope of services. They operate at the periphery and do not provide care directly, at least not in the traditional sense. They do not interact with patients, at least not physically. Their day-to-day business operations (receiving information, processing it, and sending out results), combined with relatively clean data and high adherence to protocols, make for a reliable AI development and deployment environment. Additionally, this assembly-line-like process often comes with tight quality control and assurance standards, which may make AI deployments safer, especially when users misinterpret a model's output or the model fails outright.
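The receive-process-send loop and quality-control gate described above can be sketched in a few lines of Python. Everything here is a hypothetical assumption rather than any vendor's actual system: the `Case` and `Result` types, the stand-in `toy_model`, and the 0.9 confidence threshold. The point is the design choice: any model failure or low-confidence output falls back to the existing manual workflow, so the AI step adds value without becoming a single point of failure.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Case:
    case_id: str
    data: dict


@dataclass
class Result:
    case_id: str
    finding: Optional[str]
    confidence: float
    route: str  # "ai_assisted" or "manual_review"


def process_case(case: Case,
                 model: Callable[[dict], tuple],
                 min_confidence: float = 0.9) -> Result:
    """Receive a case, run the model, and apply a simple QC gate.

    The 0.9 default threshold is an illustrative assumption, not a
    recommended value.
    """
    try:
        finding, confidence = model(case.data)
    except Exception:
        # Complete model failure: route to a human, as the lab would without AI.
        return Result(case.case_id, None, 0.0, "manual_review")
    if confidence < min_confidence:
        # Low confidence: keep the finding for reference but require manual review.
        return Result(case.case_id, finding, confidence, "manual_review")
    # Confident output is flagged as AI-assisted; a professional is assumed
    # to still sign off downstream (not modelled here).
    return Result(case.case_id, finding, confidence, "ai_assisted")


def toy_model(data: dict) -> tuple:
    """Hypothetical stand-in for a trained model."""
    if "pixels" not in data:
        raise ValueError("missing input")
    return ("abnormality", data.get("score", 0.0))


for case in [Case("c1", {"pixels": [], "score": 0.95}),
             Case("c2", {"pixels": [], "score": 0.50}),
             Case("c3", {})]:
    r = process_case(case, toy_model)
    print(r.case_id, r.route, r.finding)
```

Running the loop routes `c1` to the AI-assisted path, while `c2` (low confidence) and `c3` (model failure) both fall back to manual review.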

That said, we must not forget that being highly operational can also stifle innovation: why change a system that works, especially one that is rigid and expensive to upgrade? In such cases, the value proposition of AI tools, whether cost savings, faster turnaround times, or quality improvement, must be significant enough to drive change. Data governance and privacy can also become bottlenecks when data is used to develop AI tools. While traditional providers are often regarded as “legal custodians” of their patients’ data↗︎, regulations for the ownership and protection of patient data collected by third-party healthcare providers are unclear↗︎. Some legacy labs and tele-health companies, those that have not changed their business models for decades, may find it difficult to allocate the right resources and attract the right talent to implement AI tools in their operations. This drives them to hire consultants or enter into strategic collaborations, e.g. an equity partnership between Indian radiology AI startup DeepTek and Japanese tele-radiology company Doctor-NET↗︎.

AI in Consumer Healthcare

Direct-to-patient services are part of a much larger trend: consumer healthcare. It is no surprise that healthcare has one of the lowest customer satisfaction scores across industries↗︎, and a consumer-first experience promises to change that↗︎. Such efforts tend to combine physical and digital channels into holistic patient experiences↗︎, delivering bedside and now “webside” healthcare↗︎. Examples include membership-based primary care practices One Medical↗︎ (IPO in 2020), Forward↗︎, and Carbon Health↗︎.

From an AI application standpoint, this is perhaps the most appropriate context. Consumer healthcare products often claim to be “greenfield projects”, i.e. starting from a blank slate without the constraints of existing systems. This entails rethinking healthcare delivery from the ground up, which creates opportunities to implement AI solutions from day one, or at least to build the right provisions for future work. Today, we often see a mismatch between modern AI products and outdated healthcare IT: a vendor may offer a cloud-based AI service only to realize that much healthcare data is still stored on-premises, behind hospital firewalls. This mismatch is likely to erode with modern healthcare technology stacks that will hopefully generate higher-quality data out of the box. Relying on customer (i.e. patient) satisfaction and quality of care as performance metrics may also drive greater adherence to clinical workflows, another key enabler of successful AI implementation.

Instead of attempting to tame a somewhat irrational and outdated system, a patient-first approach may help structure and modernize healthcare while making it more amenable to new technologies generally, and AI specifically.

Disaggregation of Healthcare

Narrow AI applications in healthcare today, those that perform a very specific task on a specific data type, require an equally narrow and well-contained context for successful implementation. These ideal contexts are more likely to exist beyond the walls of traditional providers. Despite increased consolidation in healthcare↗︎ (a few large health systems buying up smaller ones), we are also seeing the disaggregation of the hospital↗︎, with healthcare outsourcing on the rise↗︎. This may translate into more vendors, more specialized services, and more opportunities for AI applications to be bundled into these services. As for current efforts to integrate AI products at or close to the point-of-care, barriers will remain until a major system overhaul takes place.