AI is fundamentally reshaping synthetic biology as it enters its third decade. Once limited by slow and error-prone DNA design and assembly, the field now thrives on rapid, iterative engineering cycles that turn cells into programmable factories. With design and build no longer the bottleneck, the challenge has now shifted to data analysis, creating new opportunities for AI to drive discovery. In this article, we will explore how AI is enabling the design of molecules never seen in nature by linking sequence to structure and function, while navigating vast biological design spaces. We will then examine how startups are harnessing the power of AI-driven synthetic biology in drug discovery from optimizing antibodies to developing novel viral vectors for gene therapy. Finally, we will discuss how AI integration with wet lab experiments creates a powerful data flywheel effect contributing to an ever expanding knowledge base, as well as outline key challenges ahead.

Making Insulin in the Lab

Insulin was originally sourced from pig pancreases, so its synthesis by Genentech in 1979 marked a major scientific milestone within the field of synthetic biology. By inserting the human insulin gene into E. coli cells, scientists were able to coax the bacteria into producing insulin. In addition to addressing ethical concerns around animal welfare, making insulin in the lab allowed for cost-effective scale. Without synthetic insulin, an area larger than the surface of the earth would be needed today to raise pigs and harvest this critical protein to treat millions of diabetics around the world↗︎.

Synthetic biology is the systematic design and engineering of biology. The field is built around the introduction of engineering principles into biology through standardizing and abstracting biological components. Through a better understanding of the language of biology, akin to programming in computer science, the ultimate goal of synthetic biology is producing biological systems with predictable behaviors↗︎.

The ability to fluently read (sequencing) and write (synthesis) this language promises to help tackle some of the world’s most pressing challenges. On the healthcare front, cell and gene therapies allow for correcting defective genes in patients while engineered proteins are developed into highly precise targeted therapies. Mosquito-borne diseases, such as malaria and Zika, could be eliminated by designer mosquitoes with gene drives that short-circuit the usual patterns of genetic inheritance and prevent disease transmission↗︎. On the industrial front, engineering cells to a certain specification and rewiring their metabolism enables the production of almost everything we consume today from flavors and fabrics to food and fuels↗︎. Whether it is replacing oil and gas with biofuel fermentation or engineering bacteria to deliver nitrogen to plants and eliminate the need for fertilizers, many industrial processes are poised for a biological transformation.

The Cell as a Factory

Synthetic biology is centered around harnessing the cellular machinery and utilizing cells as tiny factories to produce biological material. The process starts with the design of genetic constructs that contain a gene of interest - chosen for its ability to encode a protein with desired properties or functions - along with regulatory elements such as promoters and terminators. The next step involves selecting host cells, with yeast and E. coli being the most common. These cells are chosen based on a multitude of factors including ease of genetic manipulation, scalability, and protein secretion yield. While other approaches replace host cells with cell-free systems that remove genetic regulation and enable direct access to the inner workings of the cell↗︎, the overall process remains largely unchanged.

The genetic constructs are then delivered and inserted into the host cells through gene transfer methods (e.g. viral vectors) and gene editing (e.g. CRISPR-Cas9) among others↗︎. The genetically modified cells are then cultivated in a suitable growth medium containing all the essential nutrients, water, and feedstock required for protein production. Finally, the harvesting process involves isolating the target protein from the culture medium and disposing of waste including other metabolic byproducts and cellular debris. The purified protein can then be further processed for its ultimate use case, whether it be an antibody drug for treating cancer or an industrial enzyme for making cheese.

Design-Build-Test-Learn + AI

At the core of synthetic biology lies the design-build-test-learn (DBTL) cycle, which mirrors the traditional scientific method of hypothesis generation, testing, and learning, but is tailored for engineering purposes. This iterative process starts with designing cellular manipulations to achieve a specific goal. This is followed by building or implementing these designs in the biological system. Next is the generation of experimental data through testing how closely the resulting phenotype achieves the desired goal. Finally, this test data is leveraged to refine future iterations and drive the cycle to the desired goals more efficiently than what may be accomplished by a random search↗︎.

DBTL cycles today are often trial-and-error processes primarily driven by our biological intuition and experience, and guided by ad-hoc practices. Given the complexity of biology paired with our incomplete understanding of cellular mechanisms, DBTL cycles face long development times and high likelihood of failure where the desired phenotype is never achieved. By capturing knowledge directly from high-throughput experimental data and predicting bioengineering outcomes, AI can play a vital role in enhancing the efficiency and accuracy of DBTL cycles. More specifically, the learn step is where AI can really shine by using data from the test step to inform the design step of the next cycle. In fact, it might as well be called the model step. The ultimate goal of tightly integrating AI models in the DBTL cycle would be to enable experiments at the massive scale of computational simulations, but with gold-standard real-life experiments↗︎.
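To make the role of the learn step concrete, here is a minimal sketch of a model-guided DBTL loop. Everything in it is an assumption made for illustration: `hypothetical_assay` stands in for the build and test steps (it simply counts hydrophobic residues), the "model" is a crude per-residue average rather than a real machine learning method, and the starting sequence is made up.

```python
import random

ALPHABET = "ACDEFGHIKLMNPQRSTVWY"  # the 20 common amino acids

def hypothetical_assay(seq):
    # Stand-in for the build + test steps (assumption): count hydrophobic residues.
    return sum(seq.count(aa) for aa in "AILMFVW")

def learn(data):
    # Learn: for each residue, average the measured score of sequences containing it.
    totals = {aa: 0.0 for aa in ALPHABET}
    counts = {aa: 0 for aa in ALPHABET}
    for seq, score in data:
        for aa in set(seq):
            totals[aa] += score
            counts[aa] += 1
    weights = {aa: totals[aa] / counts[aa] if counts[aa] else 0.0 for aa in ALPHABET}
    return lambda seq: sum(weights[aa] for aa in seq) / len(seq)

def design(parent, model, n_candidates, n_build, rng):
    # Design: propose random point mutants, keep those the model ranks highest.
    mutants = []
    for _ in range(n_candidates):
        pos = rng.randrange(len(parent))
        mutants.append(parent[:pos] + rng.choice(ALPHABET) + parent[pos + 1:])
    return sorted(mutants, key=model, reverse=True)[:n_build]

def run_dbtl(start, cycles=5, seed=0):
    rng = random.Random(seed)
    data = [(start, hypothetical_assay(start))]
    for _ in range(cycles):
        model = learn(data)                               # learn from all data so far
        parent = max(data, key=lambda pair: pair[1])[0]   # best sequence measured so far
        for seq in design(parent, model, n_candidates=50, n_build=5, rng=rng):
            data.append((seq, hypothetical_assay(seq)))   # build + test
    return data

history = run_dbtl("GSGSGSGSGS")
best_seq, best_score = max(history, key=lambda pair: pair[1])
print(best_seq, best_score)
```

The point of the sketch is the data flow rather than the model: only five of the fifty proposed designs are "built" per cycle, and the model decides which five.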

Guiding experiments in a systematic fashion - without the need for a full or intricate mechanistic understanding of the biological system - is perhaps the greatest value AI can deliver to synthetic biology experiments↗︎. This comes along with improvements in speed, both in terms of cycle time as well as number of cycles required to reach the desired output↗︎. Insights from AI models can also help avoid involution: iterative trial-and-error leading to endless DBTL cycles that spiral into a state of increased complexity rather than increased productivity↗︎. AI can also help expand the toolkit available to conduct such experiments. For instance, yeast and E. coli are the go-to hosts used in synthetic biology today, often because they are the most studied rather than the best suited↗︎. AI, through techniques such as transfer learning, can adapt learned knowledge from yeast models to other so-called non-model organisms↗︎ including microalgae and fungi, opening up the space for a wider range of possible products↗︎.

Unnatural Proteins

Through the statistical linking of inputs and outputs in flexible models capable of representing diverse relationships, AI can provide valuable insights into complex biological systems↗︎. However, the real promise of AI extends beyond understanding natural proteins as they exist today and towards the design of novel molecules. By exploring the vast space beyond what nature has sampled, we can unlock the potential for protein engineering to create entirely new functionalities never seen before in nature.

“The amount of sequence space that nature has sampled through the history of life would equate to almost just a drop of water in all of Earth’s oceans↗︎.”

We need to venture into nature’s uncharted territory. For many areas of biomedical research and drug development, there are no natural proteins that can serve as suitable starting points to build new proteins↗︎. Natural pathways may prove insufficient in scenarios where genes for product synthesis are unknown or where no natural pathway exists for desired biosynthesis. In such cases, designing novel non-natural pathways becomes necessary↗︎. Another example lies in gene therapy where efficacy is currently limited by the roster of naturally occurring vectors used to deliver the therapy↗︎. These vectors have not been optimized for disease treatment and are therefore unable to carry out the highly specialized functions we now ask of them.

The new generation of antibodies, enzymes, peptides, and other proteins will be designed and engineered - not discovered and screened. AI is bound to play a major role in this mindset shift by identifying new unnatural sequences and linking them to stable structures and valuable functions.

Sequence, Structure, & Function

Proteins, often described as biology’s actuators, play a critical role in various physiological processes within our bodies, where around 20,000 of them are responsible for tasks ranging from digestion to oxygen transport↗︎. Their complex 3D structures are built up of a sequence of building blocks called amino acids. Protein sequences can be represented as strings of letters; the protein alphabet consists of 20 common amino acids↗︎. Fundamental to understanding their biological functions, the study of proteins involves decoding sequences of amino acids much like deciphering human language. However, the vast combinatorial space of possible protein sequences surpasses astronomical scales. For a relatively short protein of 50 amino acids, there are over 10^65 possible combinations, while a 100 amino acid protein holds more combinations than the number of atoms in the observable universe. This makes the sequence space practically infinite.
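The arithmetic behind these figures is easy to check: a protein of length n drawn from 20 amino acids has 20^n possible sequences. The short sketch below computes the order of magnitude; the figure of roughly 10^80 atoms in the observable universe is a commonly quoted estimate and is an assumption here, not a number from this article.

```python
import math

AMINO_ACIDS = 20
LOG10_ATOMS_IN_UNIVERSE = 80  # commonly quoted order of magnitude (assumption)

def log10_sequences(length):
    # log10 of 20**length, i.e. the exponent in "10^x possible sequences".
    return length * math.log10(AMINO_ACIDS)

for length in (50, 100):
    exponent = log10_sequences(length)
    print(f"length {length}: about 10^{exponent:.0f} sequences")

print(log10_sequences(100) > LOG10_ATOMS_IN_UNIVERSE)
```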

Traditional protein engineering methods, often mimicking evolutionary processes, struggle with inefficiency due to the immense sequence space and reliance on experimental screening. Methods for exploring the sequence space, such as directed evolution and rational design, start with a natural protein and mutate it until a desired function is achieved↗︎. Because they only explore infinitesimal regions of the sequence space around their starting points, experiments may get trapped at a local peak and miss out on the global optimum↗︎. Because biology is intrinsically messy and non-modular, such approaches are often only slightly better than educated guesses↗︎. We need better means of sampling the sequence space.

Attempts at going beyond the natural protein neighborhoods have thus far been highly inefficient and artisanal in nature, relying heavily on humans for both experimental design and execution↗︎. When faced with the vast sequence space, most approaches rely on random high-throughput screening and serendipity, i.e. trial and error at a massive scale↗︎. This is where computational approaches are needed to drive experimental efforts, presenting an ideal proving ground for AI. In lieu of exploring the sequence space blindly, AI can help guide us towards meaningful sequence regions likely to produce proteins to our specifications↗︎. AI can also help fill in the blanks by more accurately interpolating between sampled points, ultimately narrowing down the options before experiments bring molecules to life in the lab. Since it is impossible to brute force our way through the entire sequence space, AI can create a virtual fitness landscape to guide search away from nonfunctional sequence neighborhoods where proteins simply cannot exist↗︎.

Exploring the Vast Sequence Space

Proteins are typically composed of reused modular elements including motifs and domains that can be assembled in a hierarchical fashion - akin to words, phrases, and sentences in human language↗︎. Given these similarities in both shape and substance, we are able to capitalize on major advances in natural language processing (NLP) to model proteins↗︎. The prior state of the art in NLP was recurrent neural networks. These operated sequentially on one word at a time and were not great at working with longer sentences; they would forget the context as they reached the end of the sentence. Then came transformer architectures in 2017↗︎, the methodology powering virtually all large language models today (ChatGPT, Claude, etc.). Transformers rely on a self-attention mechanism that assigns attention weights to words (or parts of a protein sequence) based on their surrounding context to help the model focus on pertinent information↗︎. This allows models to learn highly versatile, multipurpose, and information-rich numerical representations of protein sequences - also known as embeddings - that can be used for downstream tasks including protein structure and function prediction↗︎.
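For readers who want to see what "assigning attention weights" means mechanically, below is a minimal sketch of scaled dot-product self-attention in plain Python. It is deliberately simplified: real transformers apply learned query, key, and value projections and use many attention heads, whereas this sketch uses the embeddings directly. The two-feature embeddings (hydrophobic, charged) are hypothetical values chosen for illustration.

```python
import math

def softmax(scores):
    # Numerically stable softmax: subtract the max before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    # Scaled dot-product self-attention with identity projections (a simplification).
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)  # how much this residue attends to every residue
        context = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-feature embeddings: [hydrophobic, charged]
TOY_EMBEDDING = {"A": [1.0, 0.0], "L": [1.0, 0.0], "K": [0.0, 1.0], "E": [0.0, 1.0]}
sequence = "ALKE"
outputs, weights = self_attention([TOY_EMBEDDING[aa] for aa in sequence])
for aa, row in zip(sequence, weights):
    print(aa, [round(w, 2) for w in row])
```

In this toy example each residue places more weight on residues with similar features, and each output vector is a context-aware blend of the whole sequence.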

Another major advantage of using language models in protein modeling is their self-supervised nature. Labeled biomedical data is often difficult to come by. These models learn from the data itself by masking random portions of the protein sequence and then autocompleting them - essentially predicting an explicit ground truth. As such, language models are able to learn to speak protein↗︎ from raw sequences without labels, making them usable on any corpus at massive scale↗︎. This understanding of protein grammar enables a generative capability which in turn allows for writing entirely new protein sequences. It also greatly simplifies the protein design process where scientists can focus on creating a protein based on desired functions while leaving the sequence up to the model↗︎.
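The masking step can be sketched in a few lines. This example only prepares a training pair (masked input plus the hidden residues the model must recover); it does not train anything. The 15% masking rate follows a common convention from NLP and is an assumption here, as are the `?` mask symbol and the made-up example sequence.

```python
import random

MASK = "?"  # placeholder mask symbol (assumption; real vocabularies use a dedicated token)

def mask_sequence(seq, mask_fraction=0.15, seed=0):
    # Hide a random subset of residues and remember them as prediction targets.
    rng = random.Random(seed)
    n_mask = max(1, round(len(seq) * mask_fraction))
    positions = sorted(rng.sample(range(len(seq)), n_mask))
    masked = list(seq)
    targets = {}
    for pos in positions:
        targets[pos] = seq[pos]  # the ground truth comes from the sequence itself
        masked[pos] = MASK
    return "".join(masked), targets

original = "MKTAYIAKQRQISFVKSHFSRQ"  # illustrative sequence, not a real protein
masked, targets = mask_sequence(original)
print(masked)
print(targets)
```

Because the labels are just the hidden residues, any unlabeled sequence database can serve as training data.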

Moreover, AI methods for searching the sequence space can also be refined based on the DBTL iteration cycle. While earlier cycles may focus on exploration - trying new unexplored neighborhoods, later cycles lean more towards exploitation - concentrating on an already identified neighborhood and picking the best performers. Loss functions used in training AI models can be optimized for pure exploration, pure exploitation, or anything in between↗︎. This versatility makes AI a valuable tool in protein sequence space exploration.
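One simple way to illustrate the exploration-exploitation dial is an upper-confidence-bound (UCB) score, which adds a bonus for uncertainty to the predicted value. Note that this is a candidate-selection rule swapped in for illustration rather than a training loss function as described above, and the ensemble predictions below are hypothetical numbers.

```python
import statistics

def ucb_score(predictions, beta):
    # Mean prediction plus beta times the disagreement between ensemble members.
    return statistics.mean(predictions) + beta * statistics.pstdev(predictions)

def pick(candidates, beta):
    # Choose the candidate with the highest UCB score.
    return max(candidates, key=lambda name: ucb_score(candidates[name], beta))

# Hypothetical predictions from three models for two candidate sequences
candidates = {
    "known_neighborhood": [0.80, 0.82, 0.78],  # good score, models agree
    "unexplored_region": [0.20, 0.90, 0.55],   # lower average, models disagree
}

print(pick(candidates, beta=0.0))  # pure exploitation
print(pick(candidates, beta=2.0))  # leans towards exploration
```

With `beta=0.0` the rule favors the well-characterized neighborhood; with `beta=2.0` the uncertainty bonus tips the choice towards the unexplored region. Early DBTL cycles would use a larger `beta`, later ones a smaller one.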

Stable Structures

Now that we have identified a novel protein sequence, we need to confirm if it is indeed real. Can it fold into a stable, well-defined structure? A protein’s sequence is inherently linked to its stable folded structure, which in turn dictates its functionality. Protein folding - a process driven by energy minimization - is a long-standing problem with an estimated 10^143 possible ways to fold, an observation known as Levinthal’s paradox.

Solving atomic structures remains an underdetermined problem with noisy data↗︎. It is a laborious and expensive process where multi-year research projects focus on describing the structure of a single protein↗︎. These have traditionally relied on experimental techniques including cryo‐electron microscopy and X‐ray crystallography↗︎. While reliable, these techniques are time-consuming, resource-intensive, and come with a host of limitations due to the immense complexity of protein folding. They are also limited in terms of scale: the largest repository of experimentally verified protein structures, the Protein Data Bank↗︎, holds just over 230,000 structures - a drop in the ocean with respect to the vast sequence space.

While experimental methods remain the gold standard, physics-based computational approaches emerged as an alternative. Software like Rosetta↗︎ attempted to simulate protein folding by calculating energy functions for each amino acid and their conformations to predict the most stable fold↗︎. However, due to the astronomical number of possible conformations, these approaches were computationally prohibitive, often requiring thousands of computers running for weeks to simulate a single protein’s folding pathway↗︎.

The field experienced a paradigm shift from physics to statistics with the advent of deep learning. Rather than simulating folding from first principles, these methods learn patterns from existing protein structures. This shift is rather significant as we can now make sophisticated inferences about the relationship between sequence and structure without an atomic level understanding↗︎.

The breakthrough came in 2018 when DeepMind’s AlphaFold outperformed traditional energy-based methods and dominated the CASP13 protein structure prediction competition↗︎, followed by AlphaFold2↗︎ (2020). While AlphaFold2 relied primarily on the attention mechanism described earlier, AlphaFold3↗︎ (2024) introduced a diffusion-based architecture where noise is gradually added and then systematically removed from molecular representations - similar to how AI image generators such as Stable Diffusion and DALL-E function. The model starts with a cloud of atoms and iteratively refines it over many steps until converging on the final molecular structure↗︎. This approach has allowed it to handle significantly more complex structural challenges with greater accuracy and confidence, particularly for proteins with limited evolutionary data.
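The "add noise, then remove it" idea can be illustrated with a generic textbook-style diffusion sketch. This is not AlphaFold3's actual formulation: the linear noise schedule, the three made-up atom coordinates, and the use of the true noise in place of a trained network's prediction are all assumptions chosen to keep the example small. Real samplers also remove noise gradually over many steps rather than in the single step shown here.

```python
import math
import random

def cumulative_signal(steps, beta_start=1e-4, beta_end=0.02):
    # Linear variance schedule; entry t is the fraction of signal variance left at step t.
    kept, alpha_bars = 1.0, []
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        kept *= 1.0 - beta
        alpha_bars.append(kept)
    return alpha_bars

def add_noise(coords, alpha_bar, rng):
    # Forward process: blend every coordinate with Gaussian noise.
    noise = [[rng.gauss(0.0, 1.0) for _ in atom] for atom in coords]
    noisy = [
        [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
         for x, e in zip(atom, eps)]
        for atom, eps in zip(coords, noise)
    ]
    return noisy, noise

def remove_noise(noisy, predicted_noise, alpha_bar):
    # Reverse direction: invert the blend given an estimate of the noise.
    return [
        [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
         for x, e in zip(atom, eps)]
        for atom, eps in zip(noisy, predicted_noise)
    ]

rng = random.Random(0)
atoms = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [1.5, 1.5, 0.0]]  # made-up coordinates
alpha_bars = cumulative_signal(steps=1000)
noisy, true_noise = add_noise(atoms, alpha_bars[-1], rng)

# A trained network would estimate the noise; the true noise is used only to check the algebra.
recovered = remove_noise(noisy, true_noise, alpha_bars[-1])
print(noisy[1])
print(recovered[1])
```

At the final step almost no signal remains in `noisy`, which is the "cloud of atoms" starting point; the quality of a real model depends entirely on how well its network estimates the noise.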

In addition to AlphaFold, models like Boltz-2↗︎ now extend beyond proteins to predict DNA and RNA folding patterns. These models can also predict complex multi-molecular structures such as virus spike proteins interacting with antibodies and sugars, as well as binding interactions between proteins and various molecules. This capability opens new frontiers in drug discovery, where understanding how proteins interact with potential therapeutic compounds is crucial.

Useful Functions

Now that we have a stable protein with a well-defined structure, we must ensure it serves the intended function. Protein functions can be broadly categorized into four interconnected domains. The first category encompasses target affinity and biological function: how effectively proteins bind to their targets, their binding kinetics, specificity, and overall biological activity. These properties directly determine a protein’s therapeutic efficacy.

The second functional category addresses safety and immunogenicity concerns. Historically, the immune system hasn’t been receptive to novel proteins, creating a significant barrier for therapeutic applications. As such, the goal is to co-optimize for both immunogenicity and protein function simultaneously↗︎, and incorporate naturalness metrics that indicate whether a designed protein will possess desirable developability profiles and low immunogenicity↗︎.

The third category focuses on biophysical properties, including thermal stability, solubility, conformational dynamics, and resistance to degradation. These characteristics determine whether a protein can withstand the conditions it will encounter during storage, transportation and ultimate use↗︎.

The fourth category addresses manufacturability and developability. These are production metrics such as titer, rate, and yield that determine whether a protein can be produced at scale: crucial considerations for translating promising designs from the lab to the clinic.

Optimizing numerous protein functions simultaneously is a multi-objective challenge for which AI is very well suited↗︎, as optimizing one feature at a time will likely lead to improvements in one at the expense of others. Experimentally measured functions are crucial, as the most effective AI approaches for protein function optimization learn from experimental validation through tight integration with wet lab feedback loops. These approaches often involve creating custom scoring functions that assign different weights to various functional parameters, allowing researchers to navigate the vast design space efficiently↗︎.
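A custom scoring function of this kind can be as simple as a weighted sum. The sketch below is an illustration only: the property names, the 0-to-1 normalized values, and the weights are all hypothetical, and real pipelines may use more elaborate schemes such as Pareto ranking.

```python
def weighted_score(properties, weights):
    # Combine normalized property values (0 = worst, 1 = best) into one number.
    return sum(weights[name] * properties[name] for name in weights)

def rank(candidates, weights):
    # Order candidate names from best to worst under the given weights.
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )

# Hypothetical measurements for two designs
candidates = {
    "design_A": {"affinity": 0.9, "stability": 0.4, "low_immunogenicity": 0.5, "yield": 0.3},
    "design_B": {"affinity": 0.7, "stability": 0.8, "low_immunogenicity": 0.7, "yield": 0.7},
}

affinity_only = {"affinity": 1.0, "stability": 0.0, "low_immunogenicity": 0.0, "yield": 0.0}
balanced = {"affinity": 0.25, "stability": 0.25, "low_immunogenicity": 0.25, "yield": 0.25}

print(rank(candidates, affinity_only))
print(rank(candidates, balanced))
```

The ranking flips between the two weightings, which is the trade-off described above: the design with the best affinity is not the best overall once stability, immunogenicity, and yield are taken into account.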

Applications

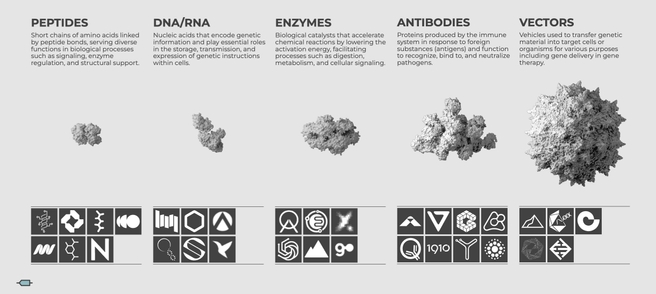

Having explored how AI can help design protein sequence, structure, and function, we now turn to the diverse applications of these technologies. The choice of chemical space to explore largely depends on what one is trying to optimize, whether it’s an antibody drug, an enzyme for industrial production, or a delivery vehicle for gene therapy.

Overview of AI-powered synthetic biology startups categorized by their primary focus areas and technological approaches.

Direct Applications: Protein Design

The most obvious direct application is the design of therapeutic proteins. Companies including Nabla Bio and BigHat Biosciences use AI to design and optimize antibody therapeutics↗︎ through predicting function from sequence alone↗︎. Menten AI capitalizes on quantum computing to unlock the immense parallelism needed to explore the peptide space↗︎. Natural protein discovery also benefits from AI approaches. Nuritas discovers bioactive peptides in natural food sources, identifying compounds with therapeutic potential that have evolved naturally over millions of years↗︎.

Indirect Applications: Enabling Technologies

Instead of developing therapeutics directly, other companies invest in the technologies and workflows that accelerate their creation. Aether Bio exemplifies this approach by developing enzymes for downstream biomanufacturing with a focus on process innovation↗︎. On the software side, Cradle↗︎ and Tamarind↗︎ are building computational tools and interfaces that help scientists design and optimize proteins for specific functions.

Specialized Approaches: Structure Prediction

Given the complexity of protein design, some companies focus on specific areas of the pipeline. In the structure prediction space, Gandeeva combines AI with cryogenic electron microscopy (cryo-EM), an experimental technique that has taken over structural biology in the past few years↗︎, to accelerate structure determination at atomic resolution↗︎. Others including Charm Therapeutics↗︎ are working on structural prediction of macromolecular configurations, specifically the co-crystal structure of a protein-ligand complex based on the protein’s primary sequence and the ligand’s chemical structure↗︎.

Beyond Protein Engineering: Parts, Small Molecules, and Diagnostics

The applications of AI in synthetic biology extend beyond protein engineering to other biological components, including predicting the function of biological “parts” such as promoters, ribosome binding sites, and untranslated regions↗︎. At the DNA/RNA level, companies like Octant and Hexagon Bio are applying AI to small molecule drug development. Octant’s discovery platform engineers human cells to act as reporters, turning on the expression of a genetic barcode if a particular drug target is activated↗︎, while Hexagon is mining fungal genomes and discovering evolutionarily refined small molecules alongside their protein targets↗︎. In diagnostics, Sherlock’s DNA/RNA detection platform uses synthetic gene circuits that can be programmed to distinguish targets based on single nucleotide differences↗︎.

Gene Therapy: A New Frontier

Gene therapy represents a particularly promising application area for AI and synthetic biology - the key components of which include the capsid (delivery vehicle), promoter (control element), and therapeutic transgene (genetic payload to treat disease).

Due to the inherent complexity, many companies take a horizontally integrated approach by focusing on one of these components. For many diseases, we often precisely know the genetic payloads we want to deliver, but do not always have the right vehicles to deliver them to relevant cells↗︎. Gene therapies have traditionally relied on naturally occurring adeno-associated viruses (AAV) to deliver payloads, but these face limitations in biodistribution, off-target gene expression, pre-existing immunity, and manufacturability↗︎. This has prompted the use of AI to design custom AAV capsids with specific characteristics, as demonstrated by Dyno Therapeutics↗︎, Apertura↗︎, and Capsida↗︎.

For instances where payloads are too large to be contained within AAVs, companies including Replay are focused on utilizing the large cargo capacity of other viruses - namely the herpes simplex virus (HSV) - to deliver big genes↗︎. Replay also boasts a hub-and-spoke model wherein the hub innovates and refines platform technologies in a centralized, scalable manner, while the spokes represent focused therapeutic development programs↗︎. AI plays a vital role in supporting such a model through both scalability, enhancing the hub’s ability to rapidly iterate on platform technologies that all spokes benefit from, and cross-pollination, where insights from one spoke help refine AI models used across others.

Compounding Value

The integration of AI models with synthetic biology wet lab experiments creates a powerful data flywheel effect where each experimental cycle contributes to an ever expanding knowledge base as more of the landscape is explored↗︎. Data becomes extensible over time enabling better models and predictions. Even failed experiments provide valuable information where strains that fail to achieve their objectives still contribute data that informs future designs. As such, the next strain that goes through the system will have a higher chance of success. This compounding pattern and increasing probability of success with each iteration is the hallmark of a true platform technology, creating enduring competitive advantages and distinguishing it from the traditional single product or “asset” approach↗︎.

Scaling Experimental Throughput

To fully leverage this potential, companies are rethinking how experiments are conducted and scaled as traditional well plates simply cannot generate data at the pace required to train sophisticated AI models. Companies like Sestina Bio (acquired by Inscripta) use microfluidics, microscopic droplets, and microchannels to miniaturize experiments↗︎, while Aether has invested in proprietary custom-built hardware and robotic systems to fit their scaling needs↗︎.

Approaches to Data Generation

Beyond scaling experimental throughput, companies are also exploring innovative approaches to maximize the value of biological data. EVQLV uses evolutionary modeling methods to optimize antibody design by mimicking natural selection processes. Starting from a given antibody sequence, their models computationally generate evolutionarily possible sequences with high affinity and lower likelihood of failure↗︎. ProteinQure is minimizing reliance on expensive experimental data through physics-based molecular dynamics simulations. By incorporating fundamental physical principles into their models, they can make more accurate predictions with less experimental data↗︎. Others including Generate Biomedicines↗︎ and Latent Labs↗︎ have taken a diversified approach, developing platforms capable of designing multiple types of proteins including antibodies, peptides, and enzymes. This strategy allows them to extract more value from each experiment by identifying generalizable principles that apply across protein families.

Headwinds

Despite these promising advances in AI-driven synthetic biology, several significant challenges remain ahead of these technologies reaching their full potential. These headwinds span technical limitations, scaleup challenges, and fundamental conceptual questions that must be addressed.

Technical Challenges

One of the most fundamental challenges for AI models in protein design is handling the extreme variability in sequence lengths ranging from 10 to 10,000 amino acids, complicating both standardized processing and model architecture design↗︎. This is exacerbated with longer sequences as transformer-based models scale quadratically with sequence length, making them computationally expensive for long protein sequences↗︎. This highlights the importance of capturing long-range interactions, which remains challenging with current architectures.
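The quadratic scaling is easy to quantify: standard self-attention compares every position with every other position, so a sequence of length L produces an L x L matrix of attention scores. The back-of-the-envelope sketch below assumes 4 bytes per score for a single attention head in a single layer, which is an illustrative assumption; real memory use depends on the model, precision, and implementation.

```python
def attention_pairs(seq_len):
    # Every residue attends to every residue, so the score matrix is seq_len x seq_len.
    return seq_len * seq_len

def attention_megabytes(seq_len, bytes_per_value=4):
    # Memory for one score matrix (one head, one layer), in megabytes.
    return attention_pairs(seq_len) * bytes_per_value / 1e6

for length in (10, 100, 1_000, 10_000):
    pairs = attention_pairs(length)
    print(f"{length:>6} residues -> {pairs:>11,} pairs, {attention_megabytes(length):,.1f} MB")
```

Going from 1,000 to 10,000 residues multiplies the cost by 100 rather than 10, which is why the longest proteins are disproportionately expensive to model.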

Unlike the static text in language models, proteins exist in multiple conformational states that are critical to their biological function↗︎. This dynamic nature creates a fundamental modeling challenge. Most structural data in the Protein Data Bank↗︎ represents stable conformations derived from experimental methods, creating an inherent bias in training data. Models trained on this data may struggle to capture the full conformational landscape of proteins, limiting their ability to design proteins with specific dynamic properties.

A significant portion of the proteome - estimated at 44% in eukaryotes and viruses - belongs to the “Dark Proteome”, which comprises proteins with no stable fold representing a well-defined three-dimensional structure↗︎. These intrinsically disordered proteins, which often contribute to defense and signaling pathways, pose a particular challenge for AI models trained primarily on structured proteins. Current models struggle with these proteins as we have very little structural data about them beyond their sequences. While models can predict where disorder occurs, designing functional disordered proteins remains largely beyond the capabilities of current AI methods.

While the protein research community excels at organizing competitions like CAFA for function prediction↗︎, CASP for structure prediction↗︎, and CAPRI for protein-protein docking↗︎, it lags behind other fields in establishing standardized benchmarks for model evaluation. Unlike competitions that occur at specific intervals, benchmarks are instantaneously accessible at any given point in time and therefore represent an important step toward accelerating progress in the field↗︎.

Scaleup Challenges

“Just because you have a bug that produces a gram per liter in a flask doesn’t mean you are ready to go commercial↗︎.”

One of the most significant barriers to commercializing synthetic biology products is scaling up production. Organisms engineered to perform well in a laboratory reactor often need further tweaking to grow and thrive under pressure in steel tanks for large-scale manufacturing↗︎. This transition from bench-scale to pilot-scale to full-scale involves understanding how numerous process variables (feed rate, pH, temperature, fermentation time, mixing regime, media composition, aeration rate, etc.) impact host physiology, cell growth, product titers, rates, and yields↗︎. This process remains largely heuristic, with scale-up development often seen as more of an art than a science↗︎.

Current AI models typically optimize for molecular properties but rarely account for manufacturability parameters critical for real-world deployment. This is primarily due to the lack of appropriate training data, despite modern fermentation systems containing sophisticated process controls and comprehensive data collection systems that could be leveraged for training AI algorithms. Capitalizing on the data generated by these industrial systems is key in building the next-generation of AI models that consider manufacturability alongside molecular function.

Conceptual Limitations: Is Protein Sequence Really Like Language?

A more fundamental question concerns the conceptual framework underlying many AI approaches to protein design. The analogy between protein sequences and human language, while useful, has significant limitations that may constrain progress↗︎. Unlike human language, proteins lack clear punctuation, stop words, and separable structures like words, sentences, and paragraphs. Specific words can have critical influence e.g. changing “love” to “loved” significantly alters meaning, while in proteins effects may be more aggregate e.g. altering the overall hydrophilicity of a region. Additionally, proteins form complex three-dimensional structures, a phenomenon that has no direct analog in natural language↗︎.

As the field advances, researchers are increasingly recognizing the need for multimodal approaches that unify sequence, structure, interaction data, and experimental measurements. These include architectures that integrate spatial geometry and learn from evolutionary constraints. In essence, we are attempting to treat proteins less like language and more like physical, functional systems embedded in complex biological contexts↗︎.

The Future of AI in Synthetic Biology

Democratization of AI Models Accelerates Innovation

Unlike previous computational biology tools that required specialized expertise, today’s most sophisticated AI models are increasingly accessible to non-technologists with virtually no barriers to entry. For instance, setting up and running Rosetta required significant technical expertise. Contrast this with running AlphaFold in a notebook bypassing the need to write a single line of code, download software, set up local environments, and more importantly avoiding command line intimidation↗︎.

This democratization also enables the community to build upon and extend models. A few days after the release of AlphaFold 2, users reported a trick on Twitter that allowed the model to predict quaternary structures - something which few, if anyone, expected the model to be capable of↗︎. Platforms like Hugging Face have further accelerated this trend by creating open-source communities where models can be shared, modified, and deployed with minimal friction. This collaborative ecosystem has enabled rapid iteration and innovation across the synthetic biology landscape.

The Mini-Biologics Opportunity

The growing importance of biologics presents a compelling opportunity for AI-driven synthetic biology. By 2024, biologics had grown to represent a third of all FDA drug approvals↗︎ and 7 of the top 10 drugs by revenue↗︎. Despite this growth, current AI approaches remain more established in small molecule discovery↗︎. This creates a significant opportunity to redirect AI efforts toward biologics, particularly as the FDA continues to approve more biological products.

While large biologics like monoclonal antibodies dominate current markets, their complexity suggests that AI approaches may find more immediate success with smaller proteins and peptides. These molecules, typically around 30 amino acids in length, present a more manageable search space for AI models, making sizable coverage of the chemical space a somewhat attainable goal↗︎. More specifically, naturally derived macrocycles and engineered constrained peptides have evolved to bridge the gap between small molecules and larger biologics by transcending the traditional boundaries of the cell to access deep, complex targets with high specificity. This has made them one of the fastest-growing categories of new therapeutic products and an ideal target for AI-driven design approaches↗︎.

Biology as Inspiration for New AI Techniques

Perhaps most intriguingly, the relationship between AI and biology is not unidirectional. Just as AI is transforming biological research, biology itself continues to inspire new approaches to machine learning. This suggests that a deeper understanding of biological systems through synthetic biology will drive the development of novel AI architectures and approaches↗︎. After all, biology has inspired staples of AI including neural networks, genetic algorithms, and reinforcement learning among many others↗︎.

“The complexity of biological systems is such that AI solutions based purely on brute-force correlation finding will fail to efficiently characterize the system’s intrinsic features↗︎.”

As trends discussed here continue to unfold, we can expect a new generation of AI-designed proteins that address previously intractable challenges in medicine, agriculture, and industrial biotechnology, ultimately transforming not just how we design biological systems but how we understand and interact with the living world.